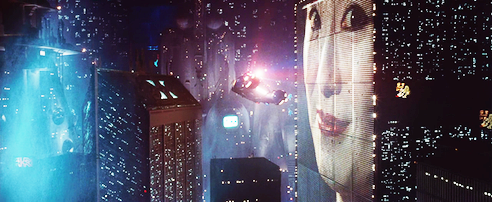

BLADE RUNNER: IS THIS YOUR FUTURE?

In Blade Runner, a 1982 science fiction movie, large corporations control nearly everything. The individual is almost powerless. It’s virtually impossible to hold anyone accountable for anything important, because decision makers are faceless and remote. Bureaucracy pervades every facet of life.

Some people argue that the hellish vision in Blade Runner is our future. In their view, gigantic corporations will consolidate their control over our economic life.

Such predictions may seem credible. Certain corporate giants, such as Facebook and Google, threaten to acquire near monopolies in their markets and in the control of information. Microsoft, Apple, General Electric, and Exxon are still among the world’s largest firms. If present trends continue, can you keep your independence? Is a Blade Runner-style dystopia inevitable?

In the past, size was a decisive market advantage. Giant corporations owned infrastructure, industrial machines, and factories. They owned distribution networks. They could produce much more than smaller businesses could. Their expenses were spread over a larger number of units. It was much easier to organize production within one firm than among many. In the Machine Age, massive size made sense.

Is this true today? Will it be true in our future?

It might not be. In the Information Age, the advantage of size is not as great as before. Some of the means of production, previously out of reach for individuals and small businesses, are much more accessible. Anyone with the necessary skills can write a new app. With only a computer and a web connection, they can make and sell their products from home.

Bringing new industrial products to market is no longer the exclusive domain of corporate giants. With about $20,000, you could buy a router, a CNC machine, and a 3D printer, and they’d be almost as accurate as the ones owned by industrial giants. If you can’t afford your own machines, you can rent time on someone else’s. You could even rent a factory instead of building your own. This can be true of large-scale production, not just product development. Some computer chip designers have been renting capacity in chip foundries owned by others.

Blade Runner may not have been prophecy. For every centralizing economic trend, there is a decentralizing trend, so we are not doomed to a miserable future of domination by giant corporations. In the future, we may have greater control over our lives.

We will say more about this in another post.

(To take control of your economic future, you need a reliable internet connection. If you don’t have one, talk to us. We can help.)